HDFS

HDFS is a distributed file system that stores large data sets on commodity hardware. It is used to scale a single Apache Hadoop cluster to hundreds (and even thousands) of nodes. HDFS is one of the major components of Apache Hadoop, the others being MapReduce and YARN. HDFS should not be confused with, or replaced by, Apache HBase, a column-oriented, non-relational database management system that sits on top of HDFS and is better suited to real-time data needs thanks to its in-memory processing engine.

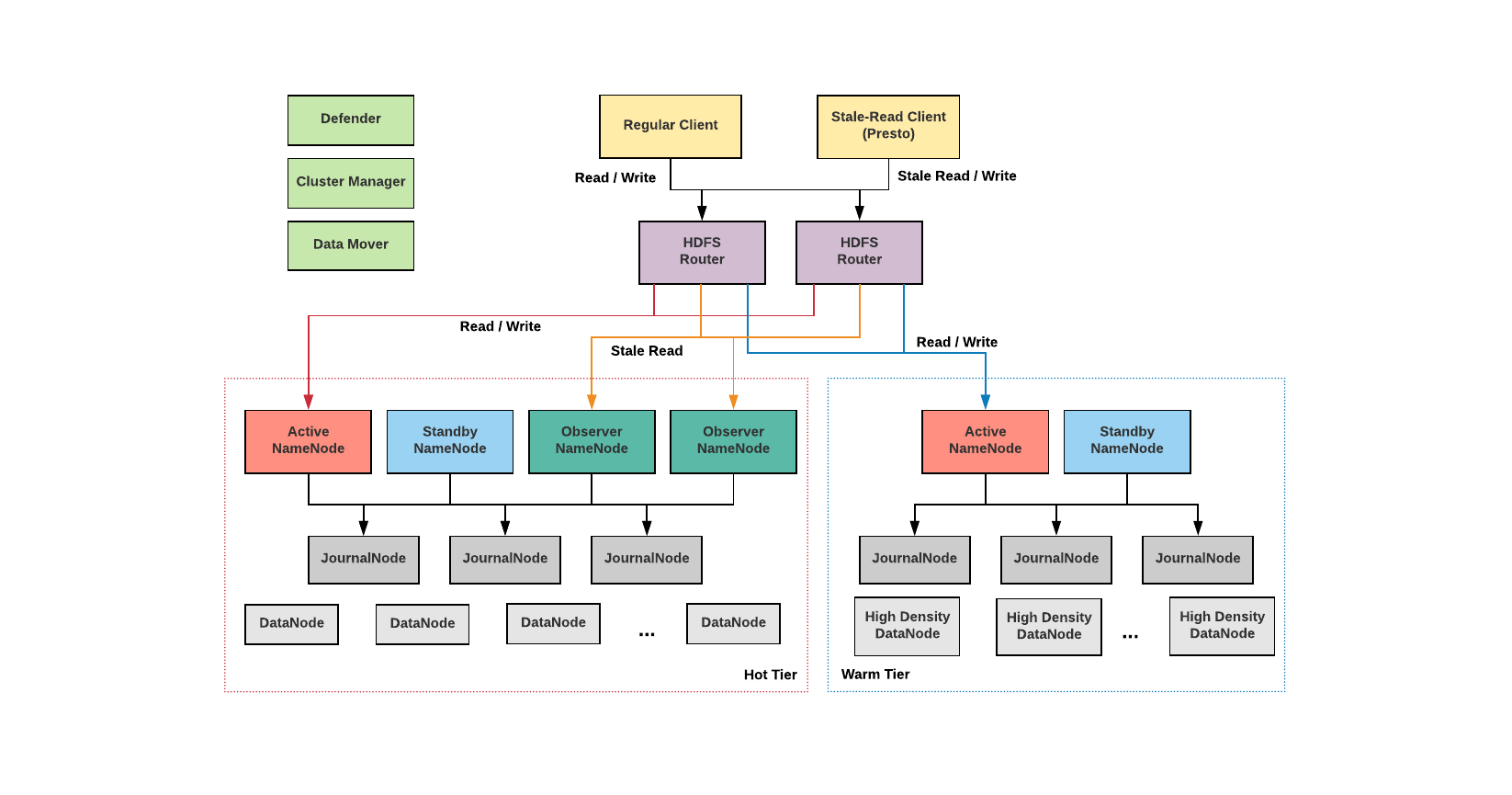

The Hadoop Distributed File System (HDFS) is the primary data storage system used by Hadoop applications. HDFS employs a NameNode and DataNode architecture to implement a distributed file system that provides high-performance access to data across highly scalable Hadoop clusters.

Hadoop itself is an open source distributed processing framework that manages data processing and storage for big data applications. HDFS is a key part of the Hadoop ecosystem of technologies. It provides a reliable means for managing pools of big data and supporting related big data analytics applications.

How does HDFS work?

HDFS enables the rapid transfer of data between compute nodes. At its outset, it was closely coupled with MapReduce, a data processing framework that filters and divides up work among the nodes in a cluster, then organizes and condenses the results into a cohesive answer to a query. Similarly, when HDFS takes in data, it breaks the information down into separate blocks and distributes them to different nodes in a cluster.

With HDFS, data is written on the server once, and read and reused numerous times after that. HDFS has a primary NameNode, which keeps track of where file data is kept in the cluster.
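The block-splitting step described above can be illustrated with a small Python sketch. It is a toy model, not HDFS code: the function names `split_into_blocks` and `assign_round_robin` are made up for this example, the 128 MB block size is the usual default in recent Hadoop releases (an assumption about your cluster's configuration), and the round-robin assignment is a deliberate simplification of how HDFS really chooses nodes.

```python
BLOCK_SIZE = 128 * 1024 * 1024  # assumed block size: 128 MB, a common default


def split_into_blocks(file_size, block_size=BLOCK_SIZE):
    """Return the size in bytes of each block a file of file_size bytes needs."""
    if file_size < 0 or block_size <= 0:
        raise ValueError("file_size must be >= 0 and block_size must be > 0")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The last block only holds the bytes that are left over.
        sizes.append(remainder)
    return sizes


def assign_round_robin(num_blocks, nodes):
    """Toy placement: hand blocks to nodes in turn (not HDFS's real policy)."""
    if not nodes:
        raise ValueError("at least one node is required")
    return {index: nodes[index % len(nodes)] for index in range(num_blocks)}


if __name__ == "__main__":
    mb = 1024 * 1024
    sizes = split_into_blocks(300 * mb)
    print([size // mb for size in sizes])  # [128, 128, 44]
    print(assign_round_robin(len(sizes), ["dn1", "dn2"]))
```

A 300 MB file therefore occupies two full blocks and one 44 MB block, and each of those blocks can live on a different node.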

HDFS also has multiple DataNodes on a commodity hardware cluster -- typically one per node in a cluster. The DataNodes are generally organized within the same rack in the data center. Data is broken down into separate blocks and distributed among the various DataNodes for storage. Blocks are also replicated across nodes, enabling highly efficient parallel processing.

The NameNode knows which DataNode contains which blocks and where the DataNodes reside within the machine cluster. The NameNode also manages access to the files, including reads, writes, creates, deletes and the data block replication across the DataNodes.
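To make the NameNode's bookkeeping more concrete, here is a minimal sketch of the two lookups it implies: which blocks make up a file, and which DataNodes hold each block. The class `ToyNameNode` and its methods are hypothetical names invented for this illustration; the real NameNode keeps far richer metadata and learns block locations from reports sent by the DataNodes.

```python
class ToyNameNode:
    """Toy metadata store: file -> blocks, and block -> DataNodes."""

    def __init__(self):
        self.file_blocks = {}      # path -> ordered list of block IDs
        self.block_locations = {}  # block ID -> set of DataNode names

    def add_file(self, path, blocks):
        """Register a file. blocks is a list of (block_id, [datanode, ...])."""
        self.file_blocks[path] = [block_id for block_id, _ in blocks]
        for block_id, nodes in blocks:
            self.block_locations[block_id] = set(nodes)

    def get_block_locations(self, path):
        """Return [(block_id, [datanodes])] in file order, as a client would need."""
        return [
            (block_id, sorted(self.block_locations[block_id]))
            for block_id in self.file_blocks[path]
        ]


if __name__ == "__main__":
    namenode = ToyNameNode()
    namenode.add_file(
        "/logs/app.log",
        [("blk_1", ["dn1", "dn2", "dn3"]), ("blk_2", ["dn2", "dn3", "dn4"])],
    )
    for block_id, nodes in namenode.get_block_locations("/logs/app.log"):
        print(block_id, nodes)
```

In this model a client asks the NameNode where the blocks are and then reads the data from the DataNodes themselves, which keeps file contents off the NameNode.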

The NameNode operates in conjunction with the DataNodes. As a result, the cluster can dynamically adapt to server capacity demand in real time by adding or subtracting nodes as necessary.

The DataNodes are in constant communication with the NameNode to determine if the DataNodes need to complete specific tasks. Consequently, the NameNode is always aware of the status of each DataNode. If the NameNode realizes that one DataNode isn't working properly, it can immediately reassign that DataNode's task to a different node containing the same data block. DataNodes also communicate with each other, which enables them to cooperate during normal file operations.
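One common way to implement this kind of status tracking is with periodic heartbeats and a timeout, which the following sketch models. The class name `HeartbeatMonitor` and the 30-second timeout are assumptions chosen for illustration, not HDFS defaults, and timestamps are passed in explicitly so the example stays deterministic.

```python
class HeartbeatMonitor:
    """Toy liveness tracker: a node is dead if its last heartbeat is too old."""

    def __init__(self, timeout_seconds):
        self.timeout_seconds = timeout_seconds
        self.last_seen = {}  # node name -> time of most recent heartbeat

    def heartbeat(self, node, now):
        """Record that node reported in at time now (seconds)."""
        self.last_seen[node] = now

    def dead_nodes(self, now):
        """Return the nodes whose last heartbeat is older than the timeout."""
        return sorted(
            node
            for node, seen in self.last_seen.items()
            if now - seen > self.timeout_seconds
        )


if __name__ == "__main__":
    monitor = HeartbeatMonitor(timeout_seconds=30)  # illustrative value
    monitor.heartbeat("dn1", now=0)
    monitor.heartbeat("dn2", now=0)
    monitor.heartbeat("dn1", now=25)  # dn1 keeps reporting, dn2 goes silent
    print(monitor.dead_nodes(now=40))  # ['dn2']
```

Once a node is flagged as dead in this model, work that depended on it can be redirected to another node that holds a replica of the same block.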

Moreover, HDFS is designed to be highly fault-tolerant. The file system replicates -- or copies -- each piece of data multiple times and distributes the copies to individual nodes, placing at least one copy on a different server rack than the other copies.
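The rack-aware placement described above can be sketched as follows. This is a simplified, deterministic model that assumes three replicas: the first goes on the writer's node, the second on a node in a different rack, and the third on another node in that second rack when one exists. The function name `place_replicas` and the topology layout are hypothetical, and a real cluster would choose among candidate nodes rather than always taking the first one alphabetically.

```python
def place_replicas(topology, writer_node):
    """Pick three nodes for a block so that at least two racks are used.

    topology maps rack name -> list of node names (assumed layout).
    """
    rack_of = {node: rack for rack, nodes in topology.items() for node in nodes}
    local_rack = rack_of[writer_node]

    remote_racks = sorted(
        rack for rack, nodes in topology.items() if rack != local_rack and nodes
    )
    if not remote_racks:
        raise ValueError("need at least two non-empty racks")

    # Second replica: first node (alphabetically) on a different rack.
    remote_rack = remote_racks[0]
    second = sorted(topology[remote_rack])[0]

    # Third replica: another node on the second replica's rack if possible,
    # otherwise any node that is not already holding a replica.
    same_rack = [node for node in sorted(topology[remote_rack]) if node != second]
    others = [node for node in sorted(rack_of) if node not in (writer_node, second)]
    candidates = same_rack or others
    if not candidates:
        raise ValueError("need at least three nodes")
    return [writer_node, second, candidates[0]]


if __name__ == "__main__":
    racks = {"rack1": ["dn1", "dn2"], "rack2": ["dn3", "dn4"]}
    print(place_replicas(racks, "dn1"))  # ['dn1', 'dn3', 'dn4']
```

With the copies spread this way, losing an entire rack still leaves at least one replica of the block reachable.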

HDFS architecture, NameNodes and DataNodes

HDFS uses a primary/secondary architecture. The HDFS cluster's NameNode is the primary server: as the central component of the Hadoop Distributed File System, it maintains and manages the file system namespace, controls client access to files and provides clients with the right access permissions. The system's DataNodes manage the storage that's attached to the nodes they run on.

HDFS exposes a file system namespace and enables user data to be stored in files. A file is split into one or more blocks, which are stored in a set of DataNodes. The NameNode performs file system namespace operations, including opening, closing and renaming files and directories. The NameNode also governs the mapping of blocks to the DataNodes. The DataNodes serve read and write requests from the clients of the file system. In addition, they perform block creation, deletion and replication when the NameNode instructs them to do so.

HDFS supports a traditional hierarchical file organization. An application or user can create directories and then store files inside these directories. The file system namespace hierarchy is like most other file systems -- a user can create, remove, rename or move files from one directory to another.

The NameNode records any change to the file system namespace or its properties. An application can stipulate the number of replicas of a file that HDFS should maintain. This number is called the replication factor of that file, and the NameNode stores it.
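As a rough illustration of a namespace that records a replication factor per file, consider the toy model below. The class `ToyNamespace` and its methods are invented for this example and skip most checks a real file system performs; the default of three replicas mirrors the usual HDFS default but should be treated as an assumption about any particular cluster.

```python
class ToyNamespace:
    """Toy hierarchical namespace that stores a replication factor per file."""

    def __init__(self, default_replication=3):
        self.default_replication = default_replication  # assumed default
        self.dirs = {"/"}
        self.files = {}  # file path -> replication factor

    def mkdir(self, path):
        self.dirs.add(path.rstrip("/") or "/")

    def create(self, path, replication=None):
        """Create a file; the parent directory must already exist."""
        parent = path.rsplit("/", 1)[0] or "/"
        if parent not in self.dirs:
            raise FileNotFoundError(parent)
        self.files[path] = replication or self.default_replication

    def rename(self, src, dst):
        """Move a file; its replication factor travels with it."""
        self.files[dst] = self.files.pop(src)

    def set_replication(self, path, replication):
        if path not in self.files:
            raise FileNotFoundError(path)
        self.files[path] = replication


if __name__ == "__main__":
    ns = ToyNamespace()
    ns.mkdir("/logs")
    ns.create("/logs/a.log")              # gets the default factor
    ns.set_replication("/logs/a.log", 2)  # application asks for fewer copies
    ns.rename("/logs/a.log", "/logs/b.log")
    print(ns.files)  # {'/logs/b.log': 2}
```

The point of the sketch is that the replication factor is metadata attached to the file, so it can differ from file to file and be changed after the file is created.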

Features of HDFS

There are several features that make HDFS particularly useful, including:

- Data replication. This is used to ensure that the data is always available and prevents data loss. For example, when a node crashes or there is a hardware failure, replicated data can be pulled from elsewhere within a cluster, so processing continues while data is recovered.
- Fault tolerance and reliability. HDFS' ability to replicate file blocks and store them across nodes in a large cluster ensures fault tolerance and reliability.
- High availability. As mentioned earlier, because of replication across nodes, data remains available even if a DataNode fails. Surviving a NameNode failure additionally depends on running a standby NameNode.
- Scalability. Because HDFS stores data on various nodes in the cluster, as requirements increase, a cluster can scale to hundreds or even thousands of nodes.
- High throughput. Because HDFS stores data in a distributed manner, the data can be processed in parallel on a cluster of nodes. This, plus data locality (see next bullet), cuts the processing time and enables high throughput.
- Data locality. With HDFS, computation happens on the DataNodes where the data resides, rather than having the data move to where the computational unit is. By minimizing the distance between the data and the computing process, this approach decreases network congestion and boosts a system's overall throughput.

What are the benefits of using HDFS?

HDFS offers five main advantages:

- Cost effectiveness. The DataNodes that store the data rely on inexpensive off-the-shelf hardware, which cuts storage costs. Also, because HDFS is open source, there's no licensing fee.
- Large data set storage. HDFS stores a variety of data of any size -- from megabytes to petabytes -- and in any format, including structured and unstructured data.
- Fast recovery from hardware failure. HDFS is designed to detect faults and automatically recover on its own.
- Portability. HDFS is portable across all hardware platforms, and it is compatible with several operating systems, including Windows, Linux and macOS.
- Streaming data access. HDFS is built for high data throughput, which is best for access to streaming data.

HDFS use cases and examples

The Hadoop Distributed File System emerged at Yahoo as a part of that company's online ad placement and search engine requirements. Like other web-based companies, Yahoo juggled a variety of applications that were accessed by an increasing number of users, who were creating more and more data.

EBay, Facebook, LinkedIn and Twitter are among the companies that used HDFS to underpin big data analytics to address requirements similar to Yahoo's.

HDFS has found use beyond meeting ad serving and search engine requirements. The New York Times used it as part of large-scale image conversions, Media6Degrees for log processing and machine learning, LiveBet for log storage and odds analysis, Joost for session analysis, and Fox Audience Network for log analysis and data mining. HDFS is also at the core of many open source data lakes.

More generally, companies in several industries use HDFS to manage pools of big data, including:

- Electric companies. The power industry deploys phasor measurement units (PMUs) throughout its transmission networks to monitor the health of smart grids. These high-speed sensors measure current and voltage by amplitude and phase at selected transmission stations. Power companies analyze PMU data to detect system faults in network segments and adjust the grid accordingly. For instance, they might switch to a backup power source or perform a load adjustment. PMU networks clock thousands of records per second, and consequently, power companies can benefit from inexpensive, highly available file systems, such as HDFS.
- Marketing. Targeted marketing campaigns depend on marketers knowing a lot about their target audiences. Marketers can get this information from several sources, including CRM systems, direct mail responses, point-of-sale systems, Facebook and Twitter. Because much of this data is unstructured, an HDFS cluster is often the most cost-effective place to put data before analyzing it.
- Oil and gas providers. Oil and gas companies deal with a variety of data formats with very large data sets, including videos, 3D earth models and machine sensor data. An HDFS cluster can provide a suitable platform for the big data analytics that's needed.
- Research. Analyzing data is a key part of research, so, here again, HDFS clusters provide a cost-effective way to store, process and analyze large amounts of data.

HDFS data replication

Data replication is an important part of the HDFS design because it ensures data remains available if there's a node or hardware failure. As previously mentioned, the data is divided into blocks and replicated across numerous nodes in the cluster. Therefore, when one node goes down, the user can access the data that was on that node from other machines. HDFS checks at regular intervals that every block still has its required number of replicas and re-creates any that are missing.
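The recovery behavior described in this section can be approximated with a short sketch: after a node fails, find the blocks that have too few live copies and assign new copies to surviving nodes. The function names and the data layout are hypothetical, and the choice of target node (first available, alphabetically) is a simplification made for the example.

```python
def find_under_replicated(block_locations, live_nodes, replication_factor):
    """Return {block_id: missing_copies} for blocks with too few live replicas."""
    missing = {}
    for block_id, nodes in block_locations.items():
        live_copies = len(set(nodes) & set(live_nodes))
        if live_copies < replication_factor:
            missing[block_id] = replication_factor - live_copies
    return missing


def re_replicate(block_locations, live_nodes, replication_factor):
    """Return a new block map with lost replicas re-created on live nodes."""
    repaired = {}
    for block_id, nodes in block_locations.items():
        holders = [node for node in nodes if node in live_nodes]
        spare = [node for node in sorted(live_nodes) if node not in holders]
        # A block with no surviving copy cannot be restored from within the cluster.
        while holders and len(holders) < replication_factor and spare:
            holders.append(spare.pop(0))
        repaired[block_id] = holders
    return repaired


if __name__ == "__main__":
    blocks = {"b1": ["dn1", "dn2", "dn3"], "b2": ["dn2", "dn3", "dn4"]}
    live = {"dn2", "dn3", "dn4", "dn5"}  # dn1 has failed
    print(find_under_replicated(blocks, live, 3))  # {'b1': 1}
    print(re_replicate(blocks, live, 3))
```

Here block `b1` loses one copy when `dn1` fails, and the repair step restores it to three copies using a node that did not already hold the block.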